Decide When to Stop

When the end of the cycle approaches, the techniques we covered so far will put the team in a good position to finish and ship. The shaped work gave them guard rails to prevent them from wandering. They integrated one scope at a time so there isn’t half-finished work lying around. And all the most important problems have been solved because they prioritized those unknowns first when they sequenced the work.

Still, there’s always more work than time. Shipping on time means shipping something imperfect.

There’s always some queasiness in the stomach as you look at your work and ask yourself: Is it good enough? Is this ready to release?

Compare to baseline

Designers and programmers always want to do their best work. Whether the button is in the center of the landing page or two pages down a settings screen, the designer will give it their best attention. And the best programmers want the code base to feel like a cohesive whole, completely logically consistent with every edge case covered.

Pride in the work is important for quality and morale, but we need to direct it at the right target. If we aim for an ideal perfect design, we’ll never get there. At the same time, we don’t want to lower our standards. How do we make the call to say what we have is good enough and move on?

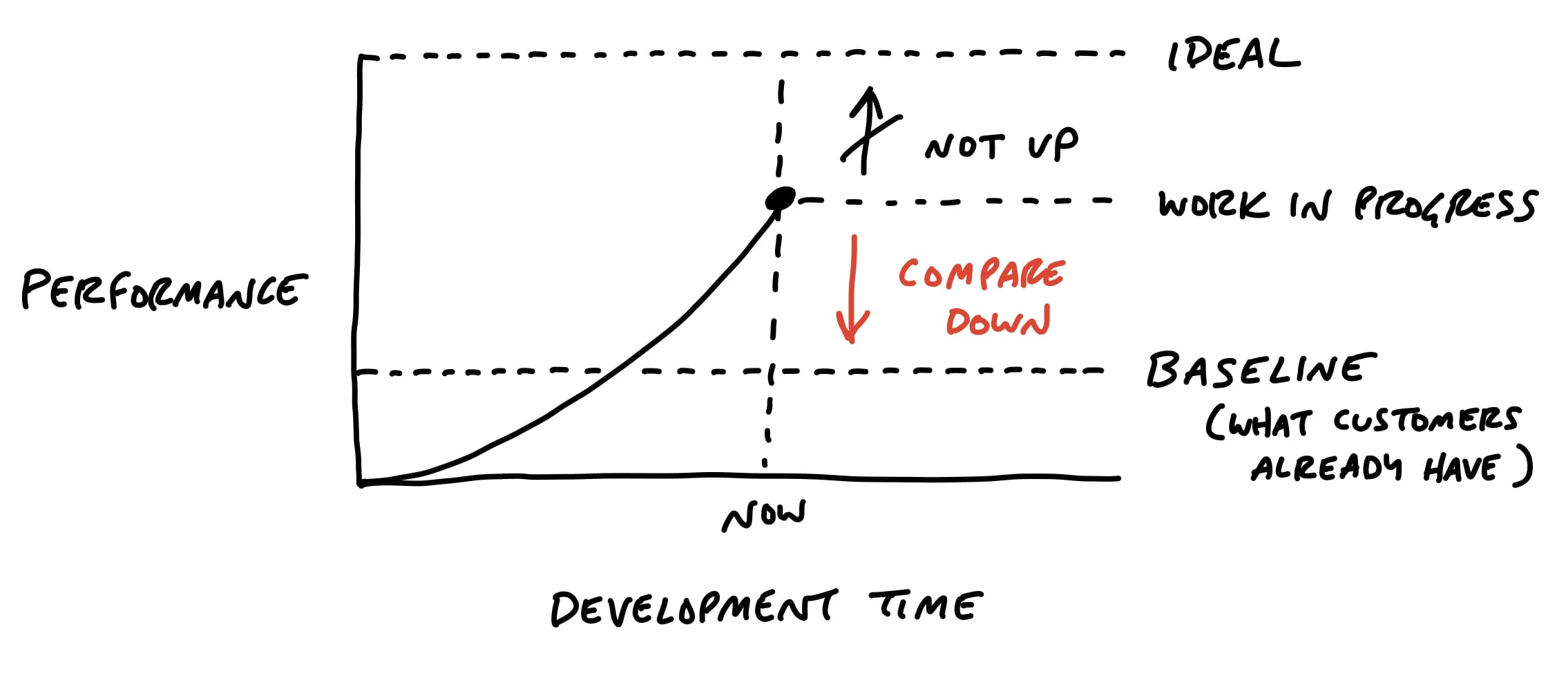

It helps to shift the point of comparison. Instead of comparing up against the ideal, compare down to baseline—the current reality for customers.

How do customers solve this problem today, without this feature? What’s the frustrating workaround that this feature eliminates? How much longer should customers put up with something that doesn’t work or wait for a solution because we aren’t sure if design A might be better than design B?

Seeing that our work so far is better than the current alternatives makes us feel better about the progress we’ve made. This motivates us to make calls on the things that are slowing us down. It’s less about us and more about value for the customer. It’s the difference between “never good enough” and “better than what they have now.” We can say “Okay, this isn’t perfect, but it definitely works and customers will feel like this is a big improvement for them.”

Make scope cuts by comparing down to baseline instead of up to some perfect ideal

Limits motivate trade-offs

Recall that the six-week bet has a circuit breaker—if the work doesn’t get done, the project doesn’t happen.

This forces the team to make trade-offs.

When somebody says “wouldn’t it be better if…” or finds another edge case, they should first ask themselves: Is there time for this? Without a deadline, they could easily delay the project for changes that don’t actually deserve the extra time.

We expect our teams to actively make trade-offs and question the scope instead of cramming and pushing to finish tasks. We create our own work for ourselves. We should question any new work that comes up before we accept it as necessary.

Scope grows like grass

Scope grows naturally. Scope creep isn’t the fault of bad clients, bad managers, or bad programmers. Projects are opaque at the macro scale. You can’t see all the little micro-details of a project until you get down into the work. Then you discover not only complexities you didn’t anticipate, but all kinds of things that could be fixed or made better than they are.

Every project is full of scope we don’t need.

Not every part of a product needs to be equally prominent, equally fast, and equally polished. Not every use case is equally common, equally critical, or equally aligned with the market we’re trying to sell to.

This is how it is. Rather than trying to stop scope from growing, give teams the tools, authority, and responsibility to constantly cut it down.

Cutting scope isn’t lowering quality

Picking and choosing which things to execute and how far to execute on them doesn’t leave holes in the product. Making choices makes the product better. It makes the product better at some things instead of others. Being picky about scope differentiates the product. Differentiating what is core from what is peripheral moves us in competitive space, making us more like or more different from other products that made different choices.

Variable scope is not about sacrificing quality.

We are extremely picky about the quality of our code, our visual design, the copy in our interfaces, and the performance of our interactions. The trick is asking ourselves which things actually matter, which things move the needle, and which things make a difference for the core use cases we’re trying to solve.

Scope hammering

People often talk about “cutting” scope.

We use an even stronger word—hammering—to reflect the power and force it takes to repeatedly bang the scope so it fits in the time box.

As we come up with things to fix, add, improve, or redesign during a project, we ask ourselves:

- Is this a “must-have” for the new feature?

- Could we ship without this?

- What happens if we don’t do this?

- Is this a new problem or a pre-existing one that customers already live with?

- How likely is this case or condition to occur?

- When this case occurs, which customers see it? Is it core—used by everyone—or more of an edge case?

- What’s the actual impact of this case or condition in the event it does happen?

- When something doesn’t work well for a particular use case, how aligned is that use case with our intended audience?

The fixed deadline motivates us to ask these questions. Variable scope enables us to act on them.

By chiseling and hammering the scope down, we stay focused on just the things we need to do to ship something effective that we can be proud of at the end of the time box.

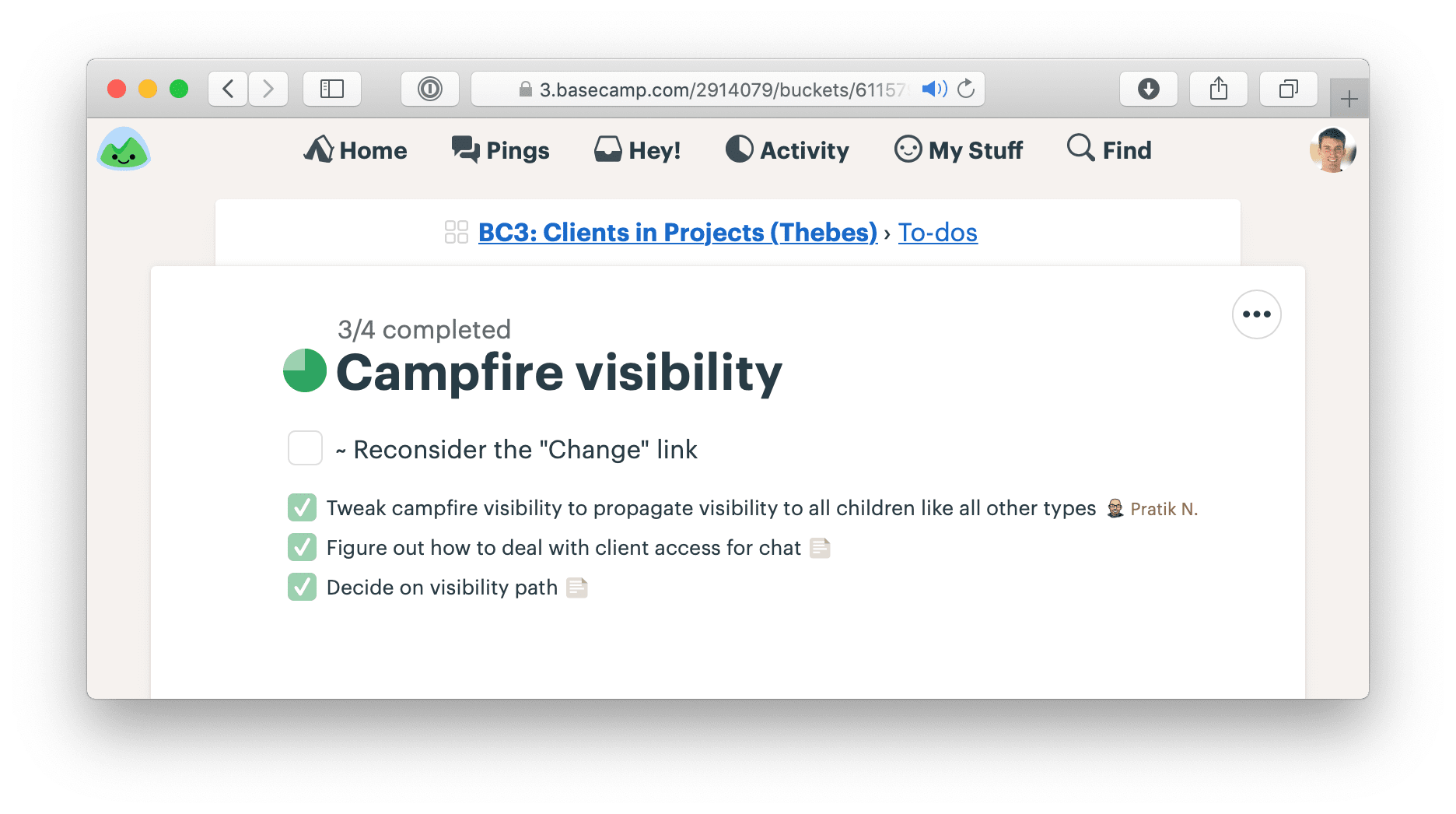

Throughout the cycle, you’ll hear our teams talking about must-haves and nice-to-haves as they discover work. The must-haves are captured as tasks on the scope. The scope isn’t considered “done” until those tasks are finished. Nice-to-haves can linger on a scope after it’s considered done. They’re marked with a tilde (~) in front. Those tasks are things to do if the team has extra time at the end and things to cut if they don’t. Usually they never get built. The act of marking them as a nice-to-have is the scope hammering.

A finished scope with one nice-to-have (marked with a “~”) that was never completed
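The tilde convention can be sketched as a small data model. This is only an illustration, not how Basecamp implements its to-do lists: the `Task` and `Scope` classes, the `hammer` method, and the example task names are all hypothetical. The one rule taken from the text is that a scope counts as done once every task without a leading "~" is finished.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    """A to-do on a scope. Hypothetical model, for illustration only."""
    title: str
    done: bool = False

    @property
    def nice_to_have(self) -> bool:
        # Assumption: a leading "~" in the title marks a nice-to-have.
        return self.title.startswith("~")


@dataclass
class Scope:
    name: str
    tasks: List[Task] = field(default_factory=list)

    def is_done(self) -> bool:
        # Only must-haves count; nice-to-haves may linger unfinished.
        return all(t.done for t in self.tasks if not t.nice_to_have)

    def hammer(self, title: str) -> None:
        # Demote a must-have to a nice-to-have by prefixing a tilde.
        for t in self.tasks:
            if t.title == title and not t.nice_to_have:
                t.title = "~ " + t.title


scope = Scope("Drafts", [
    Task("Save draft", done=True),
    Task("~ Autosave indicator"),  # never completed, does not block "done"
])
```

Here `scope.is_done()` is true even though the nice-to-have was never built. Adding an unfinished must-have would make it false again until the task is either finished or hammered down with `scope.hammer(...)`.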

QA is for the edges

At Basecamp’s current size (millions of users and about a dozen people on the product team), we have one QA person. They come in toward the end of the cycle and hunt for edge cases outside the core functionality.

QA can limit their attention to edge cases because the designers and programmers take responsibility for the basic quality of their work. Programmers write their own tests, and the team works together to ensure the project does what it should according to what was shaped.

This follows from giving the team responsibility for the whole project instead of assigning them individual tasks (see Chapter 9, Hand Over Responsibility).

For years we didn’t have a QA role. Then after our user base grew to a certain size, we saw that small edge cases began to impact hundreds or thousands of users in absolute numbers. Adding the extra QA step helped us improve the experience for those users and reduce the disproportionate burden they would create for support.

Therefore we think of QA as a level-up, not a gate or a check-point that all work must go through. We’re much better off with QA than without it. But we don’t depend on QA to ship quality features that work as they should.

QA generates discovered tasks that are all nice-to-haves by default. The designer-programmer team triages them and, depending on severity and available time, elevates some of them to must-haves.

The most rigorous way to do this is to collect incoming QA issues on a separate to-do list. Then, if the team decides an issue is a must-have, they drag it to the list for the relevant scope it affects. This helps the team see that the scope isn’t done until the issue is addressed.

We treat code review the same way. The team can ship without waiting for a code review. There’s no formal check-point. But code review makes things better, so if there’s time and it makes sense, someone senior may look at the code and give feedback. It’s more about taking advantage of a teaching opportunity than creating a step in our process that must happen every time.

When to extend a project

In very rare cases, we’ll extend a project that runs past its deadline by a couple of weeks.

How do we decide when to extend a project and when to let the circuit breaker do its thing?

First, the outstanding tasks must be true must-haves that withstood every attempt to scope hammer them.

Second, the outstanding work must be all downhill. No unsolved problems; no open questions. Any uphill work at the end of the cycle points to an oversight in the shaping or a hole in the concept. Unknowns are too risky to bet on. If the work is uphill, it’s better to do something else in the next cycle and put the troubled project back in the shaping phase. If you find a viable way to patch the hole, then you can consider betting more time on it again in the future.
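The two conditions can be read as a simple check over the outstanding tasks. The sketch below is a hypothetical illustration: `OutstandingTask`, its fields, and `may_consider_extension` are invented names, and judging whether a task is a true must-have or is downhill remains a human call. Passing the check only means an extension can be considered, not that it should be granted.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class OutstandingTask:
    """Hypothetical record of work left at the end of a cycle."""
    title: str
    must_have: bool  # survived every attempt to scope hammer it
    downhill: bool   # no unsolved problems or open questions remain


def may_consider_extension(tasks: List[OutstandingTask]) -> bool:
    # Both conditions must hold for every outstanding task.
    # With nothing outstanding there is nothing to extend for.
    return bool(tasks) and all(t.must_have and t.downhill for t in tasks)


remaining = [
    OutstandingTask("Wire up final screen", must_have=True, downhill=True),
    OutstandingTask("Figure out sync conflicts", must_have=True, downhill=False),
]
```

With the list above, `may_consider_extension(remaining)` is false because one task is still uphill, which points back to shaping instead of more time.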

Even if the conditions are met to consider extending the project, we still prefer to be disciplined and enforce the appetite for most projects. The two-week cool-down usually provides enough slack for a team with a few too many must-haves to ship before the next cycle starts. But this shouldn’t become a habit.

Running into cool-down points back either to a problem in the shaping process or to a performance problem with the team.